Recent statistical analysis shows that armies of bots have infiltrated Twitter, and the bot about to influence you is already inside your feed.

The use of social media bots to influence politics made splashy headlines around the 2016 election, but just how pervasive are these bots? Is your Twitter feed secretly full of bot activity rather than the meaningful human interaction that drew many to social platforms in the first place? The short answer is yes, say Soumendra Lahiri, the Stanley A. Sawyer Professor in Mathematics and Statistics, and Dhrubajyoti Ghosh, a doctoral student working with Lahiri: There are a lot more bots on Twitter than you might think.

The abundance of fake accounts — famously cited by Elon Musk as an obstacle to his acquisition of Twitter — intrudes upon the free exchange of ideas on social media, particularly when users can’t tell whether they’re talking to other organic users or manipulative bots deployed as part of targeted influence campaigns.

“To put it plainly, Twitter is being misused. It’s a publicly open forum, but some influential people take advantage of the openness of Twitter to try to advance their own agenda,” Lahiri said. “What we are seeing is that, depending on the issue, many non-human machines or programs participate in these conversations and oftentimes try to lead the discussion in a way that suits whoever is funding those bots.”

To understand the scope of this problem, Lahiri and Ghosh developed an algorithm to distinguish human from non-human users. Twitter has publicly claimed that the total number of false or spam accounts is a mere 5% of daily users, but Lahiri and Ghosh beg to differ. Their results suggest that bots can account for the majority of users in some Twitter conversations.

This veritable army of bots is being deployed in an attempt to influence social media users on a wide range of topics, but politics remains the most salient case, both at the level of individual political campaigns and at the level of adversarial nations portraying each other negatively.

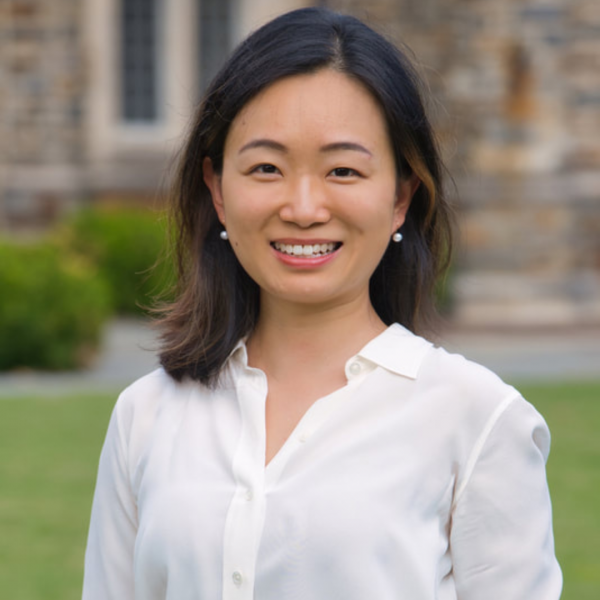

“Initially, with the rise of social media, there was a lot of panic and paranoia about how the internet was going to take over our ability to make rational decisions,” said Betsy Sinclair, professor of political science and author of The Social Citizen: Peer Networks and Political Behavior. “But that assumes you can catch politics from anyone, and that’s just not how it works.”

According to Sinclair, bots may not be very effective at influencing your political position or changing your vote, simply because bots aren’t your real friends, regardless of your connection on social media. “Unlike the coronavirus, politics is really only contagious from people that you’re likely to share a glass of water with,” Sinclair said.

However, danger may still lurk in bots’ ability to spread misinformation through unwary amplifiers. To resist these attacks, savvy users must evaluate which posts come from humans and which come from machines.

Using data science and statistical methods, Ghosh and Lahiri were able to accurately classify accounts as organic or inorganic — that is, human or bot — based on their Twitter activity.

“If you look at the pattern of timestamps on tweets, you can differentiate organic users, basically just how you’d expect,” Lahiri said. “Organic users have their own daily lifestyle, so you see them tweeting during the hour after they get off work or during their lunch break. That’s one pattern of timestamps.”

“Bot behavior looks completely different under time series analysis,” Ghosh added. Because bots don’t sleep or have any other daily obligations, they display a very different pattern of behavior.
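
To make the timestamp idea concrete, here is a minimal sketch in Python. It is not Ghosh and Lahiri's algorithm, which the article does not spell out. It assumes one simple stand-in measure: how evenly an account's tweets are spread across the 24 hours of the day, expressed as a normalized entropy between 0 and 1. The function name `hourly_entropy` and both sample accounts are made up for illustration.

```python
import math
from collections import Counter
from datetime import datetime, timedelta


def hourly_entropy(timestamps):
    """Normalized Shannon entropy (0 to 1) of tweet counts over the 24 hours of the day.

    Values near 0 mean activity is concentrated in a few hours;
    values near 1 mean activity is spread evenly around the clock.
    """
    counts = Counter(ts.hour for ts in timestamps)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    entropy = -sum((c / total) * math.log(c / total) for c in counts.values())
    return entropy / math.log(24)


start = datetime(2022, 1, 3)

# Hypothetical organic user: tweets at lunch and after work for two weeks.
human = [start + timedelta(days=d, hours=h) for d in range(14) for h in (12, 18)]

# Hypothetical bot: one tweet every hour, day and night, for two weeks.
bot = [start + timedelta(hours=h) for h in range(24 * 14)]

human_score = hourly_entropy(human)
bot_score = hourly_entropy(bot)
print(f"human: {human_score:.2f}, bot: {bot_score:.2f}")
```

In this toy data the account that never sleeps scores close to 1, while the account with a daily routine scores far lower. Real accounts are much messier, so a single number like this would be one signal among several rather than a verdict.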

Ghosh and Lahiri identified tweet content as another important indicator that a user might be a robot masquerading as a person. Looking at how many distinct words appear in a user’s tweets can unmask bots, which tend to have limited and often-repeated vocabularies. Humans, on the other hand, tend to use about 10 times more unique words than bots in their tweets.

“Even a cleverly designed program does not have the same breadth of vocabulary words and expressions as humans do,” Lahiri explained. “Somebody programmed those bots, and whatever they put in, that’s what gets used repeatedly.”
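
The vocabulary signal can be sketched just as simply. The snippet below is an illustration under stated assumptions, not the researchers' code: it counts the distinct words an account uses, with a deliberately crude tokenizer (lowercase letters and apostrophes only). The function name `distinct_words` and the sample tweets are invented for the example.

```python
import re


def distinct_words(tweets):
    """Return the set of distinct lowercase words used across a list of tweets."""
    words = set()
    for tweet in tweets:
        # Crude tokenizer (an assumption): runs of letters and apostrophes.
        words.update(re.findall(r"[a-z']+", tweet.lower()))
    return words


# Made-up sample accounts, three tweets each.
human_tweets = [
    "Long day at work, finally heading home",
    "Trying a new ramen place for lunch",
    "Can't believe that ending, what a game",
]
bot_tweets = [
    "Vote now for real change",
    "Real change now, vote",
    "Vote for change now",
]

human_vocab = distinct_words(human_tweets)
bot_vocab = distinct_words(bot_tweets)
print(len(human_vocab), len(bot_vocab))
```

The repetitive account recycles the same handful of words, so its vocabulary stays small no matter how many tweets it sends. A fair comparison on real data would also need to account for how much each account tweets.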

Beyond what they say and when they say it, bots have yet another tell: whom they’re connected to. Twitter serves up content based on many factors, including how many followers a user has and how much they use the platform. According to Lahiri and Ghosh, inorganic users typically have many more followers than organic users. The creator, or general, of a bot army may get a boost in Twitter’s algorithm by spreading their content on a highly connected network, but they also give themselves away by having all their bots follow each other.
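
One hedged way to picture the "bots follow each other" tell is to measure how densely a group of accounts is connected. The sketch below assumes a hypothetical `follows` mapping from each account to the set of accounts it follows, and a made-up helper, `follow_density`, that returns the share of possible follow links inside a group that actually exist. It is an illustration only, not the method used in the study.

```python
from itertools import permutations


def follow_density(accounts, follows):
    """Fraction of ordered pairs (a, b) within `accounts` where a follows b."""
    pairs = list(permutations(accounts, 2))
    if not pairs:
        return 0.0
    linked = sum(1 for a, b in pairs if b in follows.get(a, set()))
    return linked / len(pairs)


# Hypothetical follow graph: three bots that all follow each other,
# and three organic users with a single follow among them.
follows = {
    "bot1": {"bot2", "bot3"},
    "bot2": {"bot1", "bot3"},
    "bot3": {"bot1", "bot2"},
    "human1": {"human2"},
    "human2": set(),
    "human3": set(),
}

bot_density = follow_density(["bot1", "bot2", "bot3"], follows)
human_density = follow_density(["human1", "human2", "human3"], follows)
print(f"bots: {bot_density:.2f}, humans: {human_density:.2f}")
```

A fully interlinked cluster scores 1, which is exactly the pattern that boosts reach and, at the same time, gives the network away.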

Taking these features together, Ghosh and Lahiri’s algorithm was able to identify inorganic users with up to 98% accuracy. Though their dataset was limited to about two million tweets collected over a six-week period — Twitter is unsurprisingly extremely protective of its data — the researchers are confident that their publicly available sample is a fair representation of what’s actually on Twitter. And, unfortunately for organic users, what’s actually out there is mostly bots.
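
The article does not describe how the features are combined, so the following is only a toy sketch of the general idea: take the three signals described above (timing, vocabulary, connections), let each cast a vote, and measure accuracy against known labels. Every threshold, feature name, and data point here is a made-up assumption, and a real classifier would be fitted to data rather than hand-tuned.

```python
def looks_inorganic(features, thresholds):
    """Majority vote over three hypothetical signals; True means 'probably a bot'."""
    votes = [
        features["hourly_entropy"] > thresholds["hourly_entropy"],  # active around the clock
        features["distinct_words"] < thresholds["distinct_words"],  # small vocabulary
        features["follow_density"] > thresholds["follow_density"],  # tightly interlinked
    ]
    return sum(votes) >= 2


def accuracy(examples, thresholds):
    """Share of labeled examples on which the vote matches the known label."""
    correct = sum(
        looks_inorganic(features, thresholds) == is_bot
        for features, is_bot in examples
    )
    return correct / len(examples)


# Hand-picked thresholds (assumptions, not fitted values).
THRESHOLDS = {"hourly_entropy": 0.8, "distinct_words": 50, "follow_density": 0.5}

# Made-up labeled accounts: (features, is_bot).
examples = [
    ({"hourly_entropy": 0.97, "distinct_words": 12, "follow_density": 0.9}, True),
    ({"hourly_entropy": 0.35, "distinct_words": 240, "follow_density": 0.05}, False),
    # A human with an irregular schedule: one signal fires, the vote still says human.
    ({"hourly_entropy": 0.85, "distinct_words": 310, "follow_density": 0.1}, False),
    # A bot that mimics a human schedule and avoids other bots: it slips through.
    ({"hourly_entropy": 0.60, "distinct_words": 20, "follow_density": 0.2}, True),
]

score = accuracy(examples, THRESHOLDS)
print(f"accuracy on toy data: {score:.2f}")
```

On this toy data the vote gets three of the four accounts right. The miss is the bot built to imitate human timing and connections, which shows why any fixed set of features eventually needs updating.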

Ghosh and Lahiri found that bots account for 25–68% of Twitter users, depending on the time and issues being discussed, a staggering figure for anyone who views — or is trying to sell — social media as a source for human connection.

Though social media companies could use Ghosh and Lahiri’s findings to combat bot armies, this isn’t a war that can be won once and for all. As soon as inorganic users are reliably identified, programmers are hard at work on the next generation of more sophisticated bots, making them look more like organic users and necessitating even better methods of detection.

“It’s a cat and mouse game,” Lahiri said. “As creators change their programs, we have to look for other features we can use to find them.”

One such avenue for future research lies in the dynamics of bot networks, particularly how neighborhoods of inorganic users grow over time. Bot armies are already showing surprisingly advanced configurations, with central influencers surrounded by fringe users, indicating they’re evolving under the leadership of increasingly devious and sophisticated generals.

But don’t batten down the hatches and delete your social media accounts just yet. There may be some positive outcomes, even from negative or inauthentic online discourse.

“We do see people engaging with each other on social media in some meaningful ways, such as genuine deliberation or debate; it’s not all just vitriol and unpleasant comment sections,” said Sinclair. “It’s also important to remember that weak social ties — people who pop up in your feed now and again, someone who lives across the country who you don’t see regularly, anyone you don’t know very well — they really don’t influence you. Your strong friends who regularly like and comment on your posts and actually appear with you in pictures, they’re your true influencers.”

The bot armies may be vast, but populist rhetoric could pose a bigger threat to democracy. For more information about how Arts & Sciences researchers approach social media, read about how political scientists Jacob Montgomery, Margit Tavits, and Christopher Lucas intend to harness Twitter data to study the rise of populist rhetoric.